Tag: RoomAlive

-

Build your own holographic studio with RoomAlive Toolkit

With the current wave of new Augmented Reality devices like Microsoft HoloLens and Meta Glasses, the demand for holographic content is rising. A holographic scene can be captured with 3D depth cameras like Microsoft Kinect or Intel RealSense. By combining multiple 3D cameras, a subject can be captured from different sides. One challenge for achieving this…

-

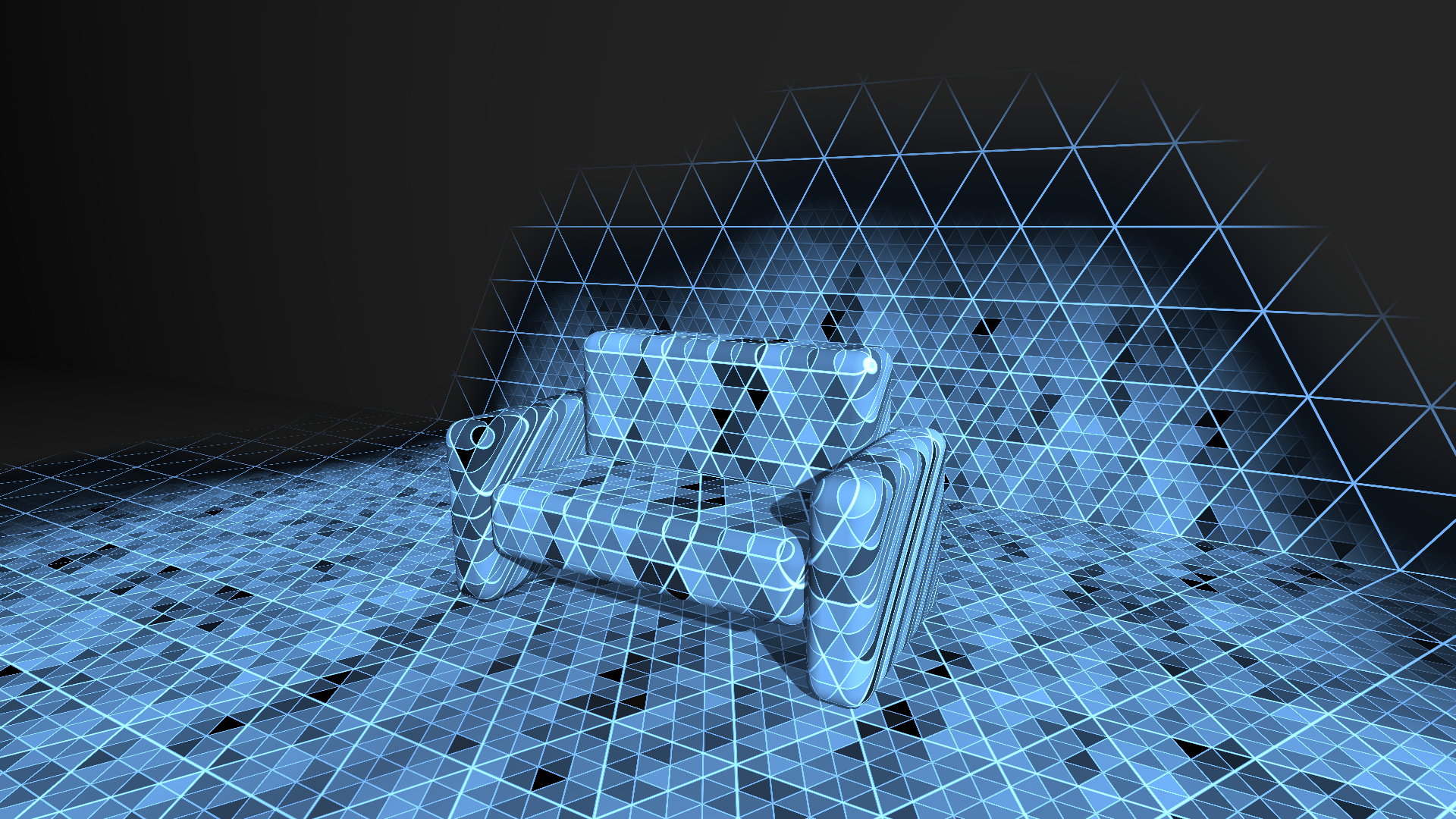

Rebuilding the HoloLens scanning effect with RoomAlive Toolkit

The initial video that explains the HoloLens to the world contains a small clip that visualizes how the device sees the environment. It shows a pattern of larger and smaller triangles that gradually overlay the real-world objects seen in the video. I decided to try to rebuild this effect in real life by using a projection mapping setup that used…