Category: HoloLens

-

Building a holographic card with the MRTK Standard Shader

How to use the Mixed Reality Toolkit (MRTK) Standard Shader to create a holographic card with a stencil portal and iridescence.

-

Demos to install on your HoloLens 2

So you finally got your hands on a brand new HoloLens 2, but you discovered that only a few apps come preinstalled on the device. Most of them are holographic versions of common Windows apps like Mail, Calendar, Photos or Microsoft Edge, and they do not do justice to the capabilities of the device. You run into…

-

Softening the HoloLens FOV border

To hide the limited field of view (FOV) of a mixed reality headset like the HoloLens or the Magic Leap, you can fade out the holograms at the border of the view. I will discuss three possible techniques, each with its own advantages and disadvantages. Note that all techniques produce the same visual result in the HoloLens. However…

-

HoloLens scanning effect in Unity

In a previous blog post I talked about my attempt to rebuild the HoloLens scanning effect as shown in this video. After following the HoloLens Academy tutorials, I decided to see how easily my existing shader could be integrated into Unity. It turned out that only a minimal amount of plumbing was needed. I took the project files from the HoloLens…

-

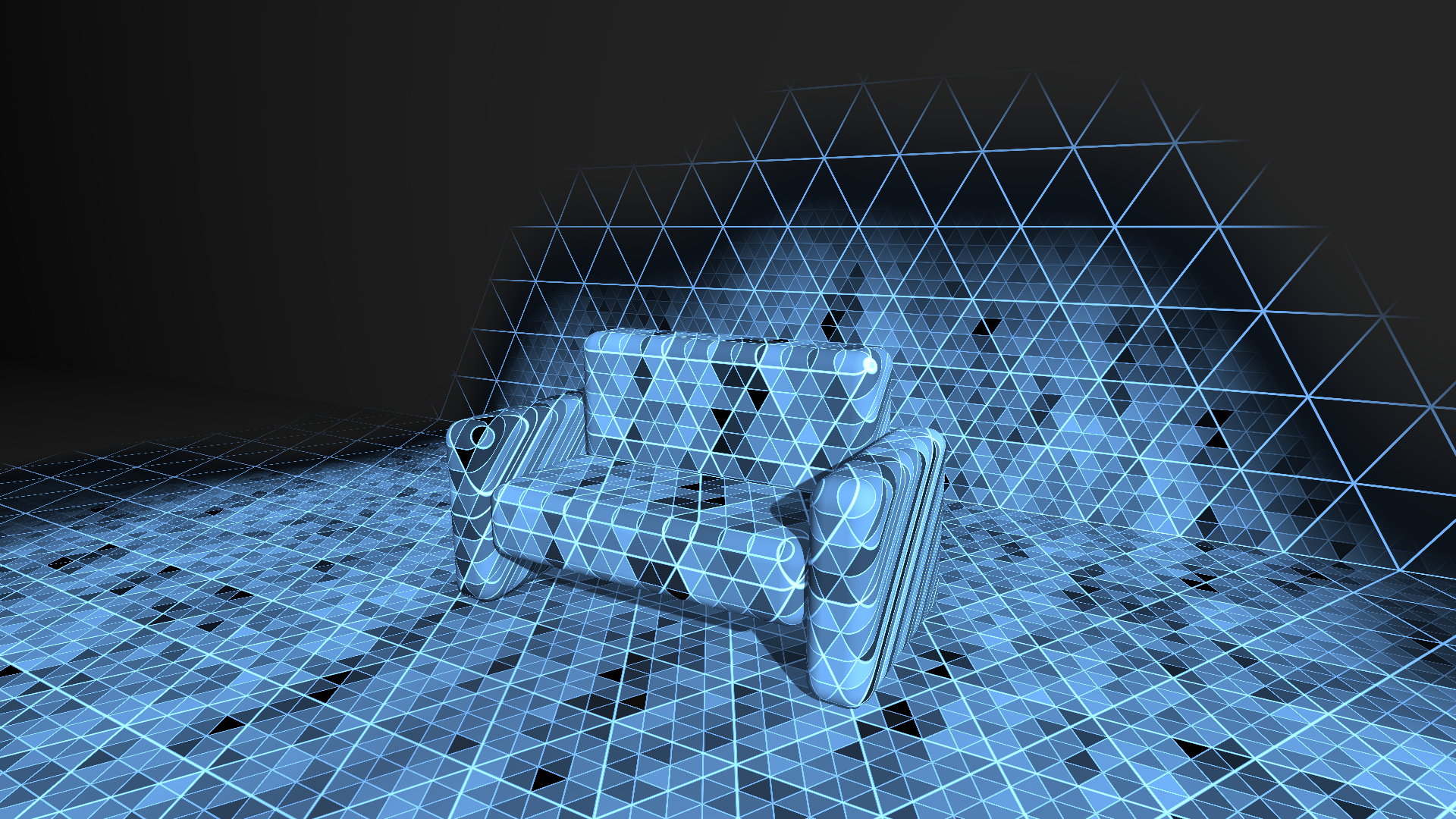

Rebuilding the HoloLens scanning effect with RoomAlive Toolkit

The initial video that introduced the HoloLens to the world contains a short clip that visualizes how the device sees its environment. It shows a pattern of large and small triangles that gradually overlay the real-world objects seen in the video. I decided to try to rebuild this effect in real life by using a projection mapping setup that used…