Tag: Kinect

-

Interactive Luminous Carpets with Kinect

The boundaries between the digital and physical world around us are fading. Traditionally passive objects like mirrors, windows, coffee tables, clothes and even plants are now becoming interactive. One innovation in this area is Luminous Carpets, a carpet with embedded LED lights. The product is a collaboration between Philips and Dutch carpet maker Desso. Making it interactive…

-

Build your own holographic studio with RoomAlive Toolkit

With the current wave of new Augmented Reality devices like Microsoft HoloLens and Meta Glasses, the demand for holographic content is rising. A holographic scene can be captured with 3D depth cameras like Microsoft Kinect or Intel RealSense. By combining multiple 3D cameras, a subject can be captured from different sides. One challenge in achieving this…

-

Keep your magic a secret; hiding your Kinect V2

Like any good magician trying to keep their tricks secret, developers of interactive installations should try to hide the technology of their installation as well as possible. This keeps your audience wondering and makes your installation more magical. In this article I explore how the Kinect can be masked or hidden behind a Magic Mirror. Masking the…

-

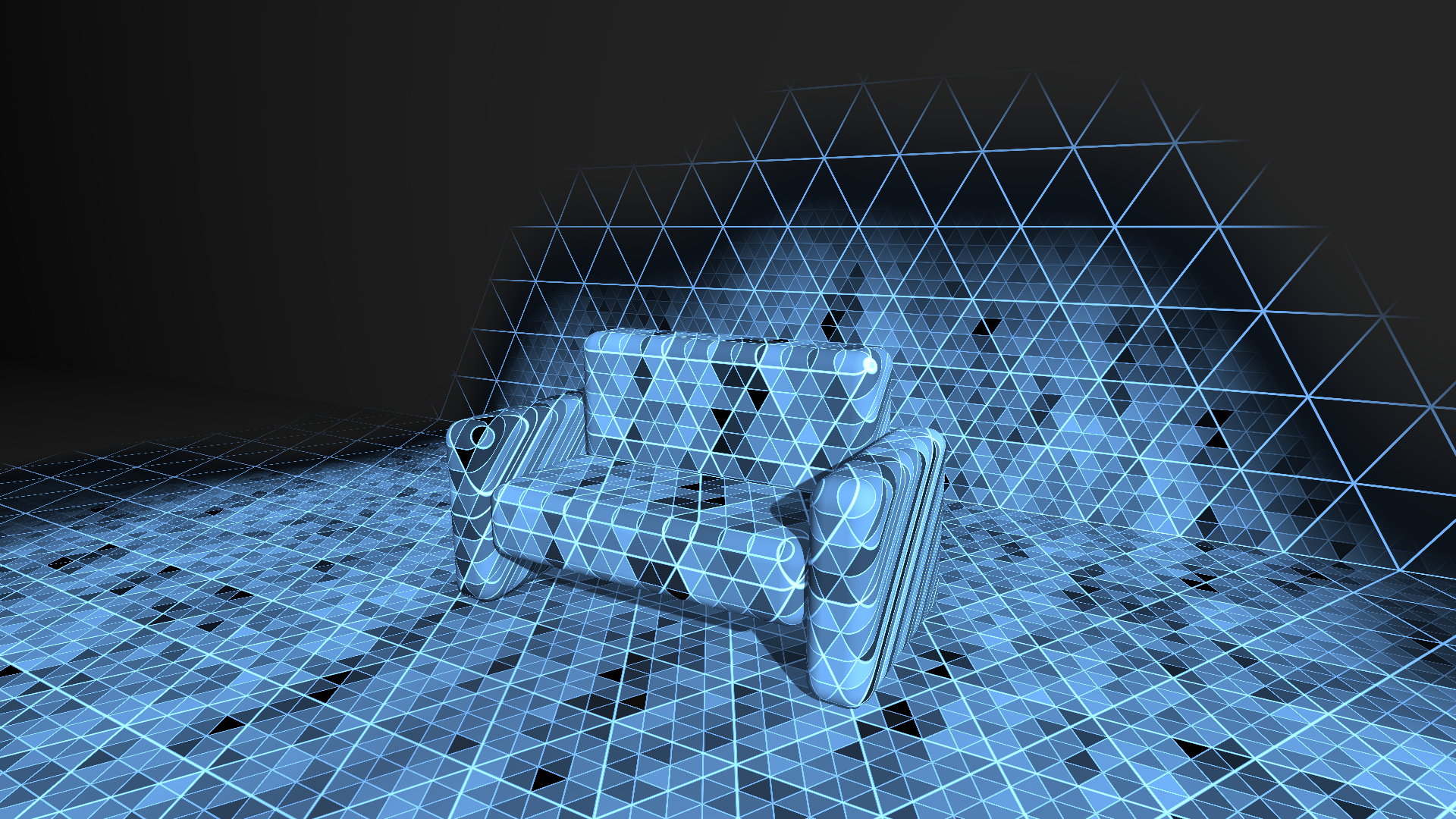

Rebuilding the HoloLens scanning effect with RoomAlive Toolkit

The initial video that introduced the HoloLens to the world contains a short clip that visualizes how it sees the environment. It shows a pattern of large and small triangles that gradually overlay the real-world objects seen in the video. I decided to rebuild this effect in real life using a projection mapping setup that used…

-

Real life Portal; a holographic window using Kinect

The game Portal (released in 2007 by Valve) is known for its gameplay, in which portals are used to teleport between different locations. Portals were rendered as virtual windows into the connected location, with the well-known orange and blue rings around them. The game spawned a huge number of memes, fan art and YouTube videos…

-

Kinect V1 and Kinect V2 fields of view compared

With the impending release of the new Kinect for Windows this summer, I took a closer look at the differences in field of view between the old and the new Kinect for Windows. A well-known improvement of the new Kinect for Windows sensor is the higher resolution of the image and depth streams. Part of the…
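A field-of-view angle is easier to reason about when converted into the physical area a sensor covers at a given distance. Assuming a simple pinhole camera model, the width covered is 2 · d · tan(FOV / 2). The sketch below applies that formula; the helper name `coverage` is hypothetical, and the angles used (roughly 57° × 43° for the Kinect V1 depth camera and 70° × 60° for the Kinect V2) are commonly quoted nominal values, an assumption to verify against the official specifications of your own sensor.

```python
import math


def coverage(distance_m: float, hfov_deg: float, vfov_deg: float) -> tuple[float, float]:
    """Return (width, height) in metres seen by a pinhole camera at distance_m.

    Uses width = 2 * d * tan(hfov / 2) and height = 2 * d * tan(vfov / 2).
    """
    width = 2 * distance_m * math.tan(math.radians(hfov_deg) / 2)
    height = 2 * distance_m * math.tan(math.radians(vfov_deg) / 2)
    return width, height


# Nominal depth-camera FOVs (degrees, horizontal x vertical).
# Assumption: commonly quoted values, not taken from the article; check your sensor.
SENSORS = {
    "Kinect V1": (57.0, 43.0),
    "Kinect V2": (70.0, 60.0),
}

if __name__ == "__main__":
    distance = 2.0  # metres from the sensor
    for name, (hfov, vfov) in SENSORS.items():
        w, h = coverage(distance, hfov, vfov)
        print(f"{name}: {w:.2f} m x {h:.2f} m at {distance:.1f} m")
```

Because the covered area grows with the tangent of half the angle, a wider field of view lets a sensor see the same area from closer by, which matters in small rooms or installations.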

-

Live Kinect holography experiment

I had some fun together with my children and created a live holographic display. Kinect holography uses a technique commonly known as Pepper’s Ghost. It was invented more than 150 years ago and is often used in theme parks or museums. A recent trend is to use it for product displays with animated special effects.…