With the current wave of new Augmented Reality devices like Microsoft HoloLens and Meta Glasses, the demand for holographic content is rising. A holographic scene can be captured with 3D depth cameras like the Microsoft Kinect or the Intel RealSense. By combining multiple 3D cameras, a subject can be captured from different sides. One challenge in doing so is calibrating the cameras so their recorded geometry can be aligned. In this article I will show you how I used RoomAlive Toolkit to calibrate two Kinects. The resulting calibration is used for rendering a live 3D scene. The source code for this application is available on GitHub.

Calibration with RoomAlive Toolkit

Calibration of multiple Kinects typically requires a manual process of recording a collection of calibration points. With RoomAlive Toolkit it is possible to automatically calibrate multiple projectors and Kinects. Since projectors are used to connect multiple Kinects during calibration, at least one projector is required. The only other requirement is that each Kinect can see a fair amount of the projected calibration images. Once the calibration is done, the projector is no longer needed.
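To make "aligned" a bit more concrete: a calibration of this kind typically boils down to one rigid transform (a pose) per camera that maps points from that camera's own coordinate space into a shared room space. The sketch below is a minimal Python illustration of that idea, not code from RoomAlive Toolkit or from the application described here. The function names, the row-major 4x4 layout, and the example pose are all assumptions made up for illustration.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
# Hypothetical layout: row-major 4x4 matrix applied to column vectors.
Matrix4 = Sequence[Sequence[float]]


def yaw_pose(angle_deg: float, translation: Point) -> List[List[float]]:
    """Build an example pose: rotation about the Y axis plus a translation."""
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    tx, ty, tz = translation
    return [
        [c, 0.0, s, tx],
        [0.0, 1.0, 0.0, ty],
        [-s, 0.0, c, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def transform_point(pose: Matrix4, p: Point) -> Point:
    """Map one point from a camera's local space into the shared space."""
    x, y, z = p
    return tuple(
        pose[r][0] * x + pose[r][1] * y + pose[r][2] * z + pose[r][3]
        for r in range(3)
    )


def to_shared_space(pose: Matrix4, points: Sequence[Point]) -> List[Point]:
    """Apply the same camera pose to every point recorded by that camera."""
    return [transform_point(pose, p) for p in points]


if __name__ == "__main__":
    # Made-up pose: a second camera turned 90 degrees and shifted 2 m along X.
    second_camera = yaw_pose(90.0, (2.0, 0.0, 0.0))
    print(to_shared_space(second_camera, [(0.0, 0.0, 1.0)]))
```

Once every camera's points are expressed in the same space, overlapping geometry from different cameras should land in roughly the same place, which is what makes a combined rendering possible.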

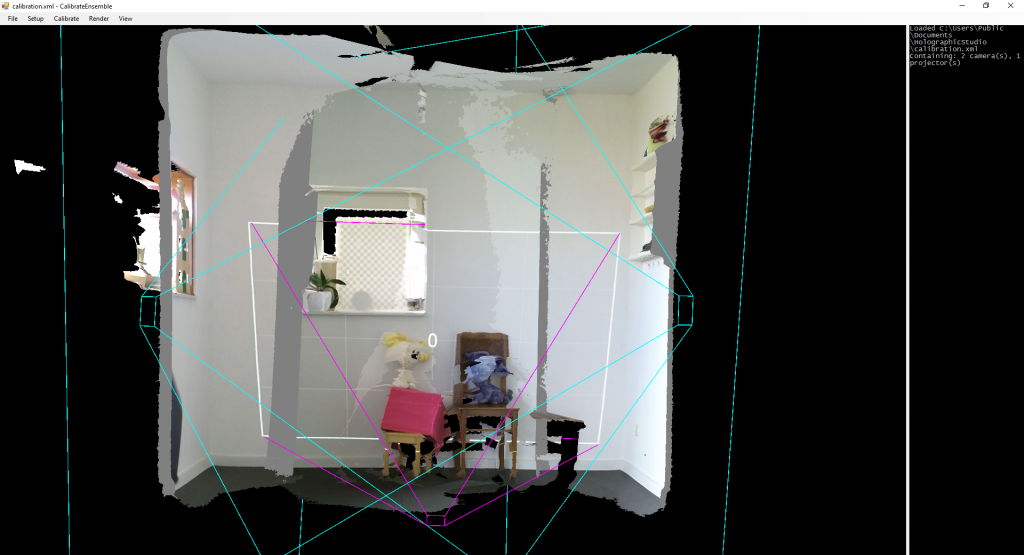

In the picture above you see the geometry and color images that were recorded by two Kinect V2s. There’s an overlap in the middle. Two magenta frustums indicate where the Kinects were positioned, and a purple frustum shows the position of the projector. Note that both Kinects were placed in portrait mode for better coverage of the room. I placed a few extra items in the room to help the solver algorithm in the RoomAlive Toolkit solve the calibration.

Collecting data from multiple Kinects

Since each Kinect V2 needs to be attached to a separate PC, we need a way to collect the depth and color data on the main system. RoomAlive Toolkit also contains a networking solution for doing that. Each system runs a KinectServer WCF service that provides the raw Kinect color and depth data. All data is updated as it arrives, with no further attempt at synchronization. This results in some artifacts at the seams of the captured scenes.
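The "update as it arrives" behavior can be pictured as a small store that keeps only the most recent frame per camera. The following Python sketch is an illustration of that pattern under my own assumptions, not the KinectServer API: the `Frame` and `LatestFrameStore` names, the byte payloads, and the `max_skew` helper are all hypothetical.

```python
import threading
import time
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class Frame:
    camera: str          # hypothetical camera identifier
    depth: bytes         # raw depth payload (placeholder type)
    color: bytes         # raw color payload (placeholder type)
    received_at: float   # local arrival time in seconds


class LatestFrameStore:
    """Keeps the newest frame per camera; older frames are overwritten."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._frames: Dict[str, Frame] = {}

    def update(self, camera: str, depth: bytes, color: bytes,
               received_at: Optional[float] = None) -> None:
        # Called from each camera's receive thread whenever data arrives.
        stamp = time.monotonic() if received_at is None else received_at
        frame = Frame(camera, depth, color, stamp)
        with self._lock:
            self._frames[camera] = frame

    def snapshot(self) -> Dict[str, Frame]:
        # Called by the renderer; returns whatever is newest right now.
        with self._lock:
            return dict(self._frames)


def max_skew(frames: Dict[str, Frame]) -> float:
    """Largest arrival-time difference between cameras in one snapshot."""
    times = [f.received_at for f in frames.values()]
    return max(times) - min(times) if times else 0.0
```

Because the renderer simply takes whatever is newest, two cameras in one snapshot can show slightly different moments in time. For a moving subject that time difference shows up as a mismatch where the two pieces of geometry meet.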

Rendering live Kinect data

The simplest form of rendering is to draw the raw pieces of geometry from each Kinect. RoomAlive Toolkit contains a SharpDX-based example that shows how to do this. It also shows how to filter the depth and how to apply lens distortion correction. Using this information, I built a new application based on SharpDX Toolkit (an XNA-like layer) to do the rendering. The application contains a few extras so you can tweak some of the rendering parameters at runtime. I added a clipping cylinder so you can easily reduce the rendered geometry to a single person or object, as seen in the video. And just for fun, I added a shader to give the live 3D scene a more cliché holographic look. More info about the parameters and controls can be found on the Holographic Studio GitHub page.
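The clipping cylinder comes down to a simple geometric test per point. Below is a minimal CPU-side sketch in Python, assuming a vertical cylinder in a Y-up coordinate system. The parameter names and the choice of axis are assumptions for illustration; the actual application may perform this test elsewhere (for example on the GPU) and with different parameters.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def inside_cylinder(p: Point, center_x: float, center_z: float,
                    radius: float, y_min: float, y_max: float) -> bool:
    """True if p lies within a vertical (Y-aligned) cylinder."""
    x, y, z = p
    dx, dz = x - center_x, z - center_z
    # Compare squared distances to avoid a square root per point.
    within_radius = dx * dx + dz * dz <= radius * radius
    within_height = y_min <= y <= y_max
    return within_radius and within_height


def clip_to_cylinder(points: Sequence[Point], center_x: float,
                     center_z: float, radius: float,
                     y_min: float, y_max: float) -> List[Point]:
    """Keep only the points inside the cylinder; everything else is dropped."""
    return [
        p for p in points
        if inside_cylinder(p, center_x, center_z, radius, y_min, y_max)
    ]
```

Moving the center and shrinking the radius around a person standing in the room would discard walls, furniture, and other background geometry while leaving the subject intact.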

Other demos of real-time video capture

Holoportation by Microsoft Research

3D Video Capture with Three Kinects by Oliver Kreylos
